Abstract

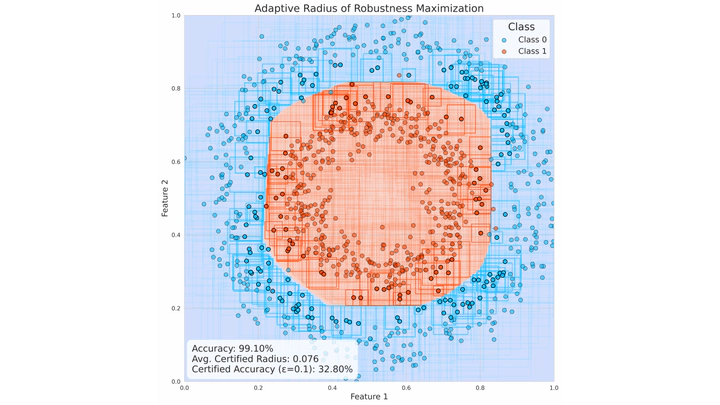

As deep learning models are increasingly deployed in real-world systems, ensuring their robustness against adversarial attacks remains a significant security challenge. Formal verification techniques can provide worst-case performance guarantees for these models. However, obtaining strong verifiable robustness certificates typically requires specialized training algorithms, known as certified training. A major drawback of existing certified training methods is a substantial drop in performance on clean (non-adversarial) data, a phenomenon known as the accuracy-robustness tradeoff. In this work, we address this issue by proposing an Adaptive Certified Training algorithm that achieves more favorable accuracy-robustness tradeoff curves. To this end, we first efficiently compute the robustness radius of each data point and then optimize an adaptive robust loss at these radii. We demonstrate improved standard (clean) performance of robust models with verifiable guarantees on image classification benchmarks of varying difficulty.
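The two-step idea of computing a per-sample robustness radius and then training at that radius can be illustrated in a toy setting. The sketch below assumes a linear classifier `f(x) = W x + b` under ℓ∞ perturbations, where both the certified radius and the worst-case logits have exact closed forms. It is not the authors' method, which targets deep networks and relies on verification bounds. The function names and the rule `eps_i = min(r_i, eps_max)` are hypothetical choices made only for illustration.

```python
import numpy as np

def certified_radius_linear(W, b, x, y):
    """Exact l_inf certified radius of the linear classifier f(x) = W x + b at (x, y).

    Assumption: a linear model stands in for a deep network. Returns 0.0 if x is
    not classified as y.
    """
    logits = W @ x + b
    radii = []
    for j in range(W.shape[0]):
        if j == y:
            continue
        margin = logits[y] - logits[j]
        if margin <= 0:
            return 0.0  # misclassified (or tied): no certified radius
        dual_norm = np.abs(W[y] - W[j]).sum()  # l1 norm is dual to l_inf
        radii.append(np.inf if dual_norm == 0 else margin / dual_norm)
    return float(min(radii)) if radii else float("inf")

def robust_ce_linear(W, b, x, y, eps):
    """Cross-entropy on worst-case logits within an l_inf ball of radius eps."""
    logits = W @ x + b
    # Upper bound (exact for linear models) on z_j - z_y over the ball
    diffs = logits - logits[y] + eps * np.abs(W - W[y]).sum(axis=1)
    m = diffs.max()
    return float(m + np.log(np.exp(diffs - m).sum()))  # stable log-sum-exp

def adaptive_robust_loss(W, b, X, Y, eps_max):
    """Hypothetical adaptive loss: each sample is trained at its own radius, capped at eps_max."""
    losses = []
    for x, y in zip(X, Y):
        eps_i = min(certified_radius_linear(W, b, x, y), eps_max)
        losses.append(robust_ce_linear(W, b, x, y, eps_i))
    return float(np.mean(losses))
```

For example, with `W = np.eye(2)`, `b = np.zeros(2)` and `x = np.array([2.0, 1.0])`, the logit margin for class 0 is 1 and the ℓ1 norm of the weight difference is 2, so the certified radius is 0.5. Capping the radius at `eps_max` keeps samples that are already far from the decision boundary from being pushed toward an overly large, accuracy-degrading perturbation budget.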