Abstract

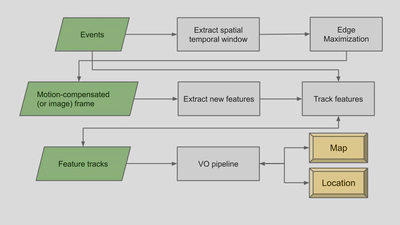

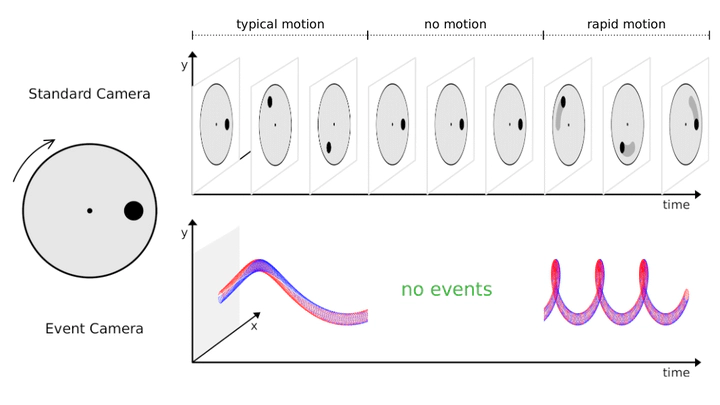

Event cameras are a new type of differential sensor that asynchronously captures contrast changes at each pixel, operating at high dynamic range and under high-speed motion. In this work, we investigate the capability of event-based methods for the problem of visual odometry. We implement three independent algorithms: 1) an event-based feature tracker; 2) monocular visual odometry based on feature tracks; 3) motion compensation of event images. Moreover, we introduce a new loss function for unsupervised motion compensation, called Edge Maximization. We then combine all the algorithms into Fully Event-Inspired Visual Odometry. To the best of our knowledge, this is the first attempt to build visual odometry that exploits only events. Finally, we provide a comparison with existing event-based visual odometry and tracking frameworks.